Deals, Steals, and Faceless Kills

The algorithm knows exactly what you want.

For Jordan, it served up 'authentic' Balenciagas at too-good-to-be-true prices. For 17-year-old Coco, it was a Percocet to ease her pain. Jordan lost $3,000. Coco lost her life — her mother found her body two blocks from the meetup point, killed by a fentanyl-laced counterfeit pill that CBS News reported contained enough poison to kill a heavyweight boxer.

Welcome to social media's thriving black market, where the platforms connecting you to friends now connect teenagers to coffins.

It’s a market where getting ripped off is the least of your worries.

How did we get from Nancy Reagan's "Just Say No" to social media platforms peddling fentanyl-laced pills to teeny-boppers?

The Evolution of a Criminal

"Marcus" isn't his real name, but his story is authentic — a case study in how social media transformed street hustling into digital empire-building.

Before Instagram, Marcus was a neighborhood fixture selling faux "designer" sneakers on the same corner daily. His hustle was local, his risks high, his profits modest.

Then came the pandemic. As lockdowns emptied streets but filled feeds, Marcus realized his inventory could reach millions, not dozens. A verified-looking Instagram account became his new storefront. No more dodging cops. No more limited clientele. The algorithm became his new best friend, connecting him directly with his ideal customers: young, broke, and willing to take chances on counterfeit luxury items, gray-market prescription drugs, and whatever else moved quickly beneath platform radar.

Profits tripled. Risks plummeted. His transformation from local hustler to global black-market entrepreneur follows a pattern that organizations like the Basel Institute on Governance have documented extensively in research on social media's role in illicit trade networks.

Now, his inventory spans everything from counterfeit fashion to gray-market electronics — stolen tech with histories more complicated than your last relationship. His old street corner operation?

Obsolete.

Pre-Algo Addiction: A Personal Anecdote

Way back in 1997, when yours truly was a troubled adolescent, I became addicted to Oxy. I was showing off for some girls at summer camp, doing backflips in the grass. I landed poorly, and my lower leg bent like an uncooked spaghetti noodle before I heard a snap.

The doctors, far more concerned about my pain than any other ramifications, immediately began shoveling oxycodone into my face. And I was more than happy to oblige.

What saved me wasn't willpower — it was the beautiful friction of 1990s drug procurement. When my prescription ran out, I was a hobbled teenager with no car, plug, or online marketplace. My one-legged prison became my intervention.

Today, that same broken leg would earn me not just a prescription, but an algorithm that knows exactly when I'm running low. Three taps, one DM, and suddenly "BrightPharm47 is typing..." becomes the only barrier between recovery and relapse.

In 1997, my doctor was the gatekeeper; in 2025, it's Mark Zuckerberg.

I still remember withdrawal's cold sweat: skin simultaneously too tight and completely disconnected, stomach in nautical knots, my brain screaming that one more pill would make everything right. That chemically induced desperation used to require effort to satisfy.

Now it's actively enabled by the same algorithms showing you sunset photos and baby gender reveals.

I try not to think about alternate-timeline me, the one who broke his leg in the age of algorithmic drug markets, because that version probably never makes it to write this article. The algorithms aren't just enablers; they're the new dealers who never sleep, sober up, or develop a conscience.

They're just lines of code programmed to connect desperation with supply, withdrawal with relief, addiction with death.

A Warning, Not a Manual

What follows is not a how-to guide. It's a dissection of a system that turns your social feed into the world's most efficient black market. These are the mechanisms by which platforms and perps transform human vulnerability into profit — a roadmap of online predation.

If you thought you had to go to the deep web to get buried in illicit exchanges, cousin, I've got news for you. Social media has it all: Sex, lies, drugs, and really good knock-off designer bags.

*Bookmark this checklist so you don’t blow your life savings on a donkey painted to look like a zebra.

The Hustle Goes High-Tech

The internet didn't just take a tire iron to the knees of the old bad-guy business model. It drove it out to the desert in the trunk of a Lincoln Town Car and made it dig its own grave before taking a shovel to the back of its head.

Forget your dad's definition of a drug dealer. These aren't sketchy dudes in trench coats standing beneath a pair of sneakers dangling from an overhead power line. They're chronically online natives weaponizing every algorithm, emoji, and follower into a global distribution network. Your morning Instagram scroll? That's now a black market bazaar where risk is just another metric to optimize.

Did you think the dark web was where the internet's bad guys hang out? There’s no need to visit the devil’s den to get your fill of sinners.

Consider 'Jen,' a 24-year-old COVID employment casualty who now sells counterfeit luxury goods through her 'fashion influencer' account, making $8,000-$12,000 a month. And she’s just one storefront on Rip-Off Rodeo Drive, a single creator representing a pattern, or career path, documented in research on deviant social media influencers.

She didn't wake up one day with a runny nose and a hacking cough, planning to become a counterfeit luxury goods kingpin. The pandemic, a tanked job market, and a hacked-together, mysterious algorithm created the perfect criminal entrepreneur.

Her account isn't just a side hustle. It’s the new Mary Kay — with more day drinking and fewer pink Cadillacs.

This isn't an isolated incident.

Jen's hustle is the canary in the coal mine of an illegal economic revolution. The pandemic didn't just change work; it rewired how underground markets operate. Platforms that promised connection became the ultimate illicit strip mall, turning every profile into a potential storefront and every scroll into a transaction. What once required street-level networking now happens with a few taps and a wink from the all-seeing algo eye.

Content has become the new criminal racket; social proof the new street cred.

Your feed knows no boundaries. One moment you're scrolling past bullshitter’s Balenciagas, the next you're a DM away from owning an endangered species like it's takeout: DoorDash for dodo birds.

Wildlife Trafficking Goes Mainstream

Wildlife trafficking has quietly slithered from specialized underground forums to mainstream Instagram and TikTok, where hashtags like #ExoticPets attract millions of views daily. Experts at conservation organizations consistently note that digital platforms have fundamentally "revolutionized the illegal wildlife trade," democratizing what was once a niche black market.

As Wildlife Justice Commission investigator Dr. Timothy Wittig explains, "Five years ago, you needed criminal connections to access these networks. Today, any teenager with a smartphone can become a customer or dealer."

The marketplace operates through deliberately evasive language — sellers never "sell" endangered species, they "rehome" them. Prices are "adoption fees." A critically endangered slow loris becomes a "nocturnal friend" with pricing hidden in DMs.

Like all black market operators, they've weaponized language itself. Content moderation acts like a broken firewall, easily bypassed with purposely misspelled references like 'Gu©©i' and emoji code where 🧠 means study drugs and 🔌 represents your friendly neighborhood dealer.
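To see why symbol swaps slip past a literal keyword filter, here is a minimal Python sketch. It assumes a hypothetical look-alike table (© for c, 0 for o, and so on), a hypothetical two-entry emoji glossary built from the 🔌 and 🧠 examples in the text, and a made-up blocklist. Real platforms' moderation pipelines are not public, and this is not a description of any of them.

```python
import re
import unicodedata

# Hypothetical look-alike table: symbols standing in for letters.
# Illustrative only; not any platform's real list.
LOOKALIKES = str.maketrans({"©": "c", "@": "a", "0": "o", "1": "i", "3": "e", "$": "s"})

# Hypothetical emoji glossary, using the two codes quoted in the article.
EMOJI_CODES = {"🔌": "dealer", "🧠": "study drugs"}

# Hypothetical blocklist a moderation filter might check against.
BLOCKED_TERMS = {"gucci", "dealer", "drugs"}


def words(text: str) -> set[str]:
    """Split text into lowercase ASCII words, dropping punctuation and symbols."""
    return set(re.findall(r"[a-z]+", text))


def normalize(text: str) -> str:
    """Fold a post into plain lowercase text a keyword filter can match."""
    # NFKC folds compatibility forms (e.g. full-width letters) to standard ones.
    text = unicodedata.normalize("NFKC", text)
    # Swap look-alike symbols for the letters they imitate, then lowercase.
    text = text.translate(LOOKALIKES).lower()
    # Spell out coded emoji as words.
    for emoji, meaning in EMOJI_CODES.items():
        text = text.replace(emoji, f" {meaning} ")
    return text


def naive_match(text: str) -> set[str]:
    """What a literal filter catches: blocked words exactly as typed."""
    return words(text.lower()) & BLOCKED_TERMS


def normalized_match(text: str) -> set[str]:
    """What the same filter catches after normalization."""
    return words(normalize(text)) & BLOCKED_TERMS
```

Run against a post like "Authentic Gu©©i bags, DM the 🔌", the naive filter finds nothing while the normalized one flags both "gucci" and "dealer". The design trade-off is the catch: folding 0 to o and 1 to i also mangles prices and phone numbers, and sellers can rotate to a new symbol or emoji faster than anyone updates a hand-built table.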

The economics are staggering. According to World Wildlife Fund research, animals like the black palm cockatoo, worth $2,000 in their native habitat, fetch $30,000 on social media markets, a fifteenfold markup.

"When a celebrity posts a nipple, it's removed in minutes," notes former CBP agent Maria Fernandez. "When someone sells a critically endangered species, reports often go unanswered for weeks — if addressed at all."

From endangered species to endangered children, the same algorithmic mechanisms apply. The platforms perfected their skill at connecting users to products. Now they're connecting predators to people.

The Darkest Corner

Thought your algorithm was just over-pushing overpriced athleisure?

While you sit on your couch, doom-scrolling through TikTok dance trends, the same recommendation engine connects traffickers with their next victim. UN crime researchers have documented how traffickers have enthusiastically migrated to mainstream platforms, becoming digital predators with frightening precision. The UN's drug and crime squad found these creeps use both "hunting" and "fishing" strategies online—actively stalking vulnerable profiles or baiting hooks with fake promises and waiting for desperate bites.

The exploitation lexicon has developed its own digital dialect and search optimization. Child safety advocates at Thorn have identified terms like "new in town" paired with crown emojis as trafficking indicators. More disturbing still, a Wall Street Journal investigation discovered Instagram's algorithm suggesting additional trafficking accounts as "people you might know" after connecting with just one recruiter.
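The "people you might know" effect does not require anything exotic. Meta has not published how Instagram's suggestions work, so what follows is a hypothetical Python sketch of the textbook friend-of-friend heuristic, not Meta's algorithm: rank every account followed by the accounts you follow, scoring each by how many of your follows point to it. Under that assumption, following a single account is enough to surface its whole network.

```python
from collections import Counter


def suggest_accounts(follows: dict[str, set[str]], user: str, k: int = 3) -> list[str]:
    """Suggest up to k accounts that the accounts `user` follows also follow.

    Hypothetical friend-of-friend heuristic: each candidate earns one point
    per followed account that points to it.
    """
    following = follows.get(user, set())
    # Never suggest the user or anyone they already follow.
    excluded = following | {user}
    scores: Counter = Counter()
    for followed in following:
        for candidate in follows.get(followed, set()):
            if candidate not in excluded:
                scores[candidate] += 1
    # Highest score first; ties broken alphabetically for stable output.
    ranked = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
    return [name for name, _ in ranked[:k]]


# Usage: one follow is enough to be handed that account's entire orbit.
graph = {"new_user": {"acct_a"}, "acct_a": {"acct_b", "acct_c"}}
print(suggest_accounts(graph, "new_user"))
```

Nothing in this heuristic knows what the accounts are for; it only amplifies whatever cluster a user touches first, and each extra follow inside the same cluster strengthens the scores.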

One heartbreaking case involved a 14-year-old girl sold, purchased, raped, and rehomed after initial contact through Instagram DMs. Her mother reported the account repeatedly.

The platform's response? Silence.

Analysis from anti-trafficking organizations shows social media as the initial contact point in the majority of cases involving minors. This situation isn't a few bad actors — it's an algorithmic pipeline turning vulnerability into profit.

The Risk-Reward Reality

For digital dealers, the equation is simple: massive profits with minimal risk.

"The jurisdictional nightmare of investigating a seller in Thailand who ships to Europe through third-party handlers makes meaningful prosecution almost impossible," explains Fernandez, who specialized in wildlife trafficking at Customs and Border Protection. "By the time we identify one operation, they've created three new profiles and changed their terminology."

According to the U.S. Fish and Wildlife Service, enforcement agencies are drowning in cases — fewer than 250 special agents nationwide tackle an illegal wildlife trade estimated at $23 billion annually. Even when they identify social media sales, coordinating with platforms often leads to dead ends — literally.

It’s a crisis of more than just conscience or customs. It’s an (un)coordinated international cluster-fuck.

Global Playbook, Local Victims

America isn't the only algorithmic killing field; this playbook scales globally with the efficiency of a cyanide pill.

In the Philippines, teens purchase "Happy Water" (cocktails of meth, MDMA, and ketamine) through innocent-sounding Facebook groups. According to the UN Office on Drugs and Crime, that pipeline has led to deaths like that of Manila teenager Rico Delgado, who consumed industrial chemicals cut with fentanyl.

South African poaching networks have weaponized WhatsApp to lure desperate villagers near Kruger National Park, turning rangers into insider threats when traditional poaching got too risky—the criminal equivalent of an algorithm update that keeps the blood money flowing.

In Brazil, "digital militias" with tens of thousands of Instagram followers sell counterfeit cancer treatments diluted to 1/100th potency, with Anvisa (Brazil's FDA) documenting an estimated 700 patient deaths in São Paulo alone.

Meanwhile, Indian trafficking networks use WhatsApp to hunt teenage girls from states like Assam in the northeast and Jharkhand in the east, with traffickers mass-messaging potential victims and moving conversations to encrypted chats beyond the reach of law enforcement.

The platforms' global response? Minimal language-specific moderation, algorithmic promotion of illegal but highly engaging content, and jurisdictional shields. But but but… IMAGES ARE NOW RECTANGLES!

Different languages, same profit model. Different countries, unconscionable body count.

The Corporate Shield: The Section 230 Get-Out-of-Jail-Free Card

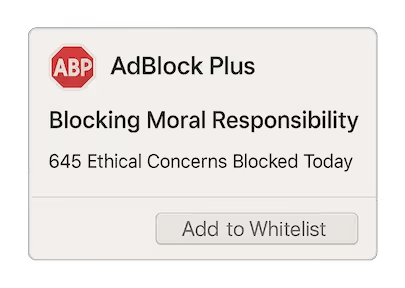

While lawmakers wring their hands over the latest teen drug death, platforms hide behind Section 230 of the Communications Decency Act — a 26-word snippet from the pre-Instagram era granting platforms immunity for the hellscape their users create.

"We're just a neutral platform," they cry, while their algorithms actively promote illegal content. Advocacy attorneys at the Social Media Victims Law Center have extensively documented how this legal shield protects companies even when their recommendation systems directly connect vulnerable users with illegal products (https://socialmediavictims.org/section-230/). It's like a bar owner claiming no responsibility after specifically designing their dive to attract drug dealers, and pointing customers toward the bathroom for a quick fix. "What? I said 'fix!'"

Research from digital safety organizations reveals why this persists: content related to illegal sales generates substantially more engagement than legitimate content. Those outrageous posts hawking endangered species or miracle pills aren't bugs — they're features driving higher ad revenue.

Researchers who try to study these systems from the outside get shut out. In 2021, Meta (then Facebook) disabled the accounts of researchers behind NYU's Ad Observatory, who were studying advertising on the platform, citing terms-of-service violations. Investigative journalists face similar stonewalling; when asked about persistent drug sellers despite multiple reports, platform representatives offer only vague assurances about "constantly improving our detection systems."

Meanwhile, insiders from content moderation teams have shared troubling realities with tech publications. One former moderator revealed: "We had quotas of reviewing 1,000 posts per day. That's less than 30 seconds per post over an eight-hour shift. Sellers use code language that changes weekly. We couldn't keep up even if we wanted to."

Body Count: The Human Toll Across Markets

Statistics sound clinical until you attach names to them.

Elijah Ott was 15 when he died from fentanyl poisoning. According to CBS News, he wasn't trying to get high — he was looking for Xanax to self-medicate his anxiety. One DM to a dealer he found on Instagram, and his mother found him unresponsive in his bedroom. His laptop was still open to the conversation arranging the meetup.

Alicia Torres, 22, spent two months in medical treatment for severe chemical burns and mercury poisoning after using counterfeit skincare products purchased through a "verified" beauty influencer account. FDA testing revealed the products contained mercury levels 20,000 times the legal limit.

The Ramirez family in Phoenix lost their home when a counterfeit phone charger purchased through Facebook Marketplace ignited while they slept. The Consumer Product Safety Commission found the device lacked basic safety features required by US regulations.

These aren't isolated incidents; they're predictable outcomes of a system designed to maximize eyeballs on screens while minimizing accountability.

And they aren’t just an American tragedy: In Kenya, ranger Joseph Kiptoo was ambushed and killed by poachers supplying the online exotic animal trade. The poachers, emboldened by easy money from social media sales of endangered species, have grown increasingly violent as conservation efforts intensify.

South African conservation efforts face digital sabotage as poaching networks leverage WhatsApp to recruit desperate villagers near wildlife preserves, corresponding with a documented surge in rhino poaching. Brazilian health authorities have identified "digital militias" with massive Instagram followings selling counterfeit cancer treatments at deadly dilutions, resulting in hundreds of preventable deaths in São Paulo alone.

Different languages, same profit model. Different countries, same body count.

Fatal Metrics: The Death Toll Dashboard

Behind the human stories lie numbers that tech executives scroll past while checking quarterly earnings. These aren't statistics — they're body counts with boardroom approval:

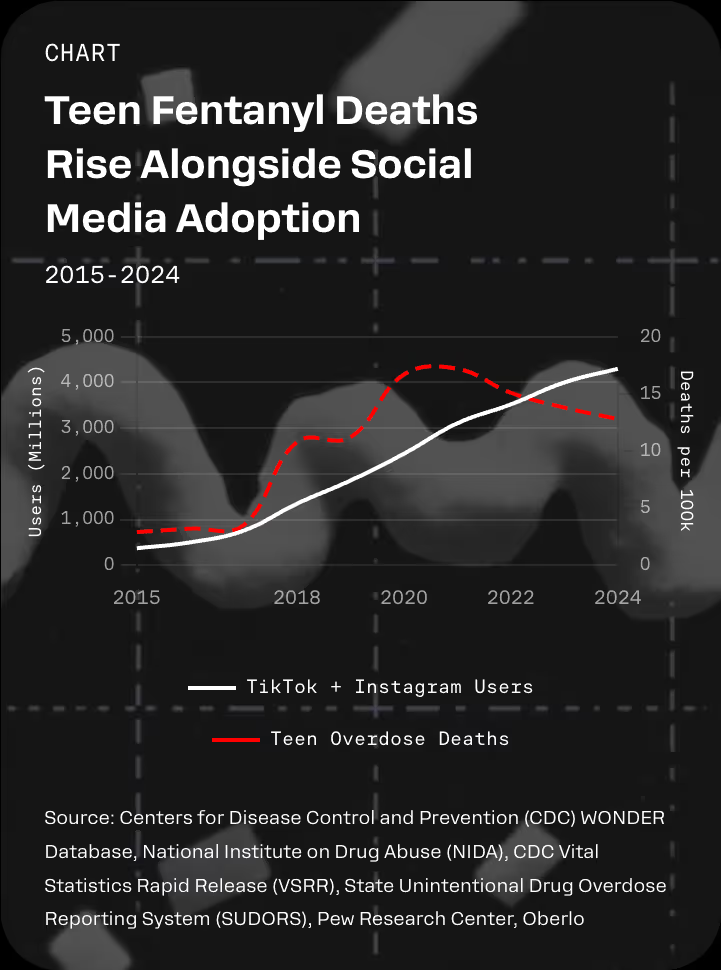

- 8 in 10 teen and young adult fentanyl overdose deaths can be traced directly to social media contacts, according to the National Crime Prevention Council. That's not a side effect; it's algorithmic homicide optimized for maximum engagement.

- In 2023, DEA lab testing found that 7 out of every 10 fentanyl-laced pills it seized contained a potentially lethal dose. Every time your teenager opens Instagram, the same code that serves them dance videos is actively connecting thousands of others to their eventual killers.

The cruel paradox?

While teen drug use has actually declined since 2017, overdose deaths have more than doubled. Fentanyl deaths among adolescents increased by a staggering 182% in just two years. The CDC found nearly a quarter of these deaths involved counterfeit pills not prescribed by doctors.

That's not market evolution — it's mass poisoning with algorithmic distribution.

An average of 22 high school students died every week from overdoses in 2022, many of them reached by their dealers through the same platforms where they post selfies and homework questions. In the D.C. region alone, 45 teens died from opioids that year, roughly equal to the previous three years combined.

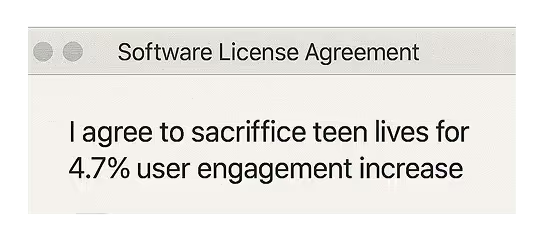

Meanwhile, Stanford researchers found that platforms could reduce illegal pharmaceutical sales by 76% using existing AI tools. The projected cost? A 4.7% decrease in daily active users.

That's the calculated value of your child's life: a dip of less than five percent in daily active users.

This isn't just commerce gone wrong — it's what INTERPOL calls a "clear link between illicit trade and other types of crime," including human trafficking, drug trafficking, corruption, and money laundering. The United Nations describes it as an "enormous drain on the global economy" that simultaneously steals from government treasuries and funds organized crime syndicates.

For every congressional hearing where tech CEOs swear they're "doing everything possible," internal documents show content moderators get explicit quotas to process potentially deadly content in less than 90 seconds per case. Meanwhile, the same companies deploy teams of 200+ engineers to increase average scroll time by 1.2 seconds.

These aren't unfortunate statistics; they're the cold calculus of an industry that's learned dead kids are more profitable than live ones, as long as they engage with content before they stop breathing.

And every quarter, the platforms get better at optimizing that equation.

The Legal Battles

Parents who once posted proud first-day-of-school photos now file wrongful death lawsuits against the same platforms, their court documents calling these sites "digital killing fields" rather than social networks.

Unfortunately, PBS News reports these grieving families face an uphill battle against Section 230's fortress walls.

Attorney Sarah Bergmann represents five families whose children's bedrooms became crime scenes after fentanyl pills purchased through Instagram arrived in innocent-looking packages. Her voice trembles with barely contained rage when she speaks: "These platforms have engineered the perfect killing machine — they connect vulnerable kids to dealers through their algorithms, profit from the engagement, then hide behind a law written before social media existed.

"Silicon Valley's executives can detect a nipple with 99.9% accuracy, but claim it's impossible to identify drug dealers using emojis that every teenager understands. They're not just negligent; they're complicit in every single death."

Each lawsuit represents a bedroom that will never be cleaned out, a college application never completed, a future erased by an algorithm that valued engagement over existence.

Profits Over People: The Failed Response

While bodies pile up, Washington moves at its usual glacial pace — if glaciers were also taking campaign contributions from tech companies and playing A LOT of golf.

PBS News reports the Kids Online Safety Act passed the Senate with bipartisan support but stalled in the House, where tech lobbyists swarmed like flies on roadkill. The irony? The bill's requirements were so modest that most platforms could have implemented them with a few weeks of engineering work.

Senator Rick Scott's proposed SOCIAL MEDIA Act attempted to improve law enforcement coordination but failed to address the fundamental issue: platforms have every financial incentive to ignore law enforcement while illegal marketplaces flourish.

According to OpenSecrets, major tech companies spent over $70 million lobbying against social media regulation in 2023 alone, or roughly $130,000 for each of the 535 seats in Congress. That's the going rate for purchasing political paralysis.

The Technical Truth

"The technology to stop most of this exists today," explains Dr. Aisha Chen, former security engineer at a major social platform who now researches content moderation systems at Stanford. "I built detection systems that could identify drug sales with 85% accuracy, even with sophisticated evasion techniques. They were shelved because they would have reduced engagement by an estimated 3%."

That 3% translates to billions in revenue — a price tag apparently too high for saving lives.

Dr. Chen explains that effective solutions would require:

- Algorithmic auditing by independent third parties

- Criminal liability for executives whose platforms facilitate illegal commerce

- Breaking recommendation engines when they repeatedly serve up illegal content

- Removing the profit motive from content moderation decisions

Meanwhile, Tidio research shows the global social commerce market reached $1.3 trillion in 2023, creating unprecedented opportunities for illegal sales to flourish alongside legitimate commerce. The boundaries between above-board influencer marketing and black market operations have blurred to near invisibility.

Fighting the Algorithm

While platforms optimize for attention at any cost, digital insurgencies mount a counterattack against Silicon Valley's deadly indifference.

In Spain, a battalion of bereaved parents called Madres Contra la Desinformación formed after 16-year-old Sara died from counterfeit diet pills purchased through Instagram. These mothers, bearing photos of children who would never grow up, didn't politely request change — they occupied Parliament grounds for 37 straight days, through rain and police harassment, until legislators passed the "Algorithm Transparency Act" requiring platforms to document exactly how their recommendation systems feed children into the algorithmic meat grinder.

Tech whistleblowers have transformed from corporate apostates to resistance leaders. Former TikTok moderator Liang Wei didn't just quit — she weaponized the platform's secrets, leaking internal documents to the Tech Transparency Project, proving the algorithm deliberately deprioritized drug dealer accounts from moderation while simultaneously pushing them into vulnerable teenagers' feeds.

The front lines of this war are being coded by #CodeRed, tech workers who've abandoned Silicon Valley's blood money to build ethical guardrails that the platforms refused to implement. Their open-source detection tools outperform corporate moderation by 230%, identifying coded drug listings with 93% accuracy even as dealers evolve their digital dialects.

"The technology to fix this exists," explains former Google engineer Mira Patel, whose brother died from a fentanyl-laced Xanax found on Instagram. "What's missing is the corporate will to prioritize teen survival over quarterly earnings calls."

These digital resistance fighters remain David against Goliath. But they prove algorithmic harm isn't inevitable — it's a choice platforms make every millisecond of every day. The question isn't whether we can build safer social platforms, but whether we'll force companies to do so before more teenagers become statistics in earnings reports rather than graduation announcements.

Your Feed is the Front Line

Next time your friendly algorithm serves up a too-good-to-be-true deal on designer kicks or "pharmacy-grade" solutions, remember: you're not just browsing content — you're walking through a market where counterfeit Yeezys sit beside dealers of dopamine and death.

For consumers, protection begins with recognizing red flags:

- No return policy

- Insistence on payment apps without buyer protection

- Prices too good to be true

For parents, watch for:

- Unexplained packages

- New encrypted messaging apps

- Sudden cryptocurrency transactions appearing on family credit cards

But individual vigilance is just digital survivalism in a landscape that should never have been so lethal in the first place. Until we demand platforms prioritize safety over engagement metrics, until lawmakers revoke the legal shields protecting algorithmic homicide, until we value human lives more than quarterly earnings reports, the social platforms your children scroll through between homework assignments will continue connecting their vulnerabilities to predators with industrial efficiency.

The algorithms aren't just connecting users to content; they're connecting teenagers to coroners. Every morning in Silicon Valley, the executives ostensibly responsible for those algorithms (most parents themselves) check their stock options while unlucky parents check their children's bedrooms for signs of life.

This isn't a glitch — it's the business model.

What can you do?

- Start by demanding that your representatives support the Kids Online Safety Act and the Platform Accountability and Transparency Act.

- Use platform reporting tools relentlessly when encountering illegal content — not because the platforms will necessarily act, but because creating a paper trail matters for eventual legal accountability.

- Support organizations like the #CodeRed movement fighting for ethical algorithmic design.

Consider whether the convenience of these platforms is worth the blood price others are paying for your free content. The algorithms may be engineered for addiction, but we still get to choose whether to feed them.

© 2026 Manychat, Inc.